Active Inference Demo: T-Maze Environment

Author: Conor Heins

This demo notebook provides a full walk-through of active inference using the Agent() class of pymdp. The canonical example used here is the ‘T-maze’ task, often used in the active inference literature in discussions of epistemic behavior (see, for example, “Active Inference and Epistemic Value”).

Note: When running this notebook in Google Colab, you may have to run !pip install inferactively-pymdp at the top of the notebook before you can import pymdp. That cell is left commented out below; uncomment it if you are running this notebook from Google Colab.

```python
# ! pip install inferactively-pymdp
```

Imports

First, import pymdp and the modules we’ll need.

```python
import os
import sys
import pathlib

import numpy as np
import copy

from pymdp.agent import Agent
from pymdp.utils import plot_beliefs, plot_likelihood
from pymdp import utils
from pymdp.envs import TMazeEnv
```

Auxiliary Functions

The plotting utilities used throughout this notebook, plot_beliefs and plot_likelihood, are imported from pymdp.utils in the cell above.

Environment

Here we consider an agent navigating a three-armed ‘T-maze,’ with the agent starting in a central location of the maze. The bottom arm of the maze contains an informative cue, which signals in which of the two top arms (‘Left’ or ‘Right’, the ends of the ‘T’) a reward is likely to be found.

At each timestep, the environment is described by the joint occurrence of two qualitatively different ‘kinds’ of states (hereafter referred to as hidden state factors). These hidden state factors are independent of one another.

The first hidden state factor (Location) is a discrete random variable with 4 levels that encodes the current position of the agent. Its four levels map to the following values: {CENTER, RIGHT ARM, LEFT ARM, CUE LOCATION}. The random variable Location taking a particular value can be represented as a one-hot vector with a 1 at the appropriate level and 0’s everywhere else. For example, if the agent is in the CUE LOCATION, the current state of this factor would be $s_1 = \begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}$.

The second hidden state factor (Reward Condition) is a discrete random variable with 2 levels that encodes the reward condition of the trial: {Reward on Right, Reward on Left}. A trial whose condition is Reward on Left is thus encoded as the state $s_2 = \begin{bmatrix} 0 & 1\end{bmatrix}$.

The environment is designed such that when the agent is located in the RIGHT ARM and the reward condition is Reward on Right, the agent has a specified probability $a$ (where $a > 0.5$) of receiving a reward, and a low probability $b = 1 - a$ of receiving a ‘loss’ (we can think of this as an aversive or unpreferred stimulus). If the agent is in the LEFT ARM under the same reward condition, the probabilities are swapped: the agent experiences loss with probability $a$ and reward with the lower probability $b = 1 - a$. In the Reward on Left condition these contingencies are reversed, so the LEFT ARM is the one that yields reward with probability $a$.

For instance, we can encode the state of the environment at the first time step in a Reward on Right trial with the following pair of hidden state vectors: $s_1 = \begin{bmatrix} 1 & 0 & 0 & 0\end{bmatrix}$, $s_2 = \begin{bmatrix} 1 & 0\end{bmatrix}$, where we assume the agent starts sitting in the central location. If the agent moved to the right arm, then the corresponding hidden state vectors would now be $s_1 = \begin{bmatrix} 0 & 1 & 0 & 0\end{bmatrix}$, $s_2 = \begin{bmatrix} 1 & 0\end{bmatrix}$. This highlights the independence of the two hidden state factors. In other words, the location of the agent ($s_1$) can change without affecting the identity of the reward condition ($s_2$).
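As a small illustration of this encoding, the following NumPy sketch builds the one-hot vectors for the Reward on Right example above. The level orderings are taken from the text; the variable names are illustrative only and are not part of pymdp.

```python
import numpy as np

# Level orderings as listed in the text
locations = ["CENTER", "RIGHT ARM", "LEFT ARM", "CUE LOCATION"]
conditions = ["Reward on Right", "Reward on Left"]

# Rows of an identity matrix are one-hot vectors
s1 = np.eye(len(locations))[locations.index("CENTER")]
s2 = np.eye(len(conditions))[conditions.index("Reward on Right")]

# Moving to the RIGHT ARM changes only the Location factor
s1_next = np.eye(len(locations))[locations.index("RIGHT ARM")]
s2_next = s2.copy()

print(s1, s2)            # [1. 0. 0. 0.] [1. 0.]
print(s1_next, s2_next)  # [0. 1. 0. 0.] [1. 0.]
```

The Reward Condition vector is carried over unchanged, which is exactly the independence of the two factors described above.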

Initialize environment

Now we can initialize the T-maze environment using the built-in TMazeEnv class from the pymdp.envs module.

Choose reward probabilities $a$ and $b$, where $a$ and $b$ are the probabilities of reward / loss in the ‘correct’ arm, and the probabilities of loss / reward in the ‘incorrect’ arm. Which arm counts as ‘correct’ vs. ‘incorrect’ depends on the reward condition (state of the 2nd hidden state factor).

```python
reward_probabilities = [0.98, 0.02] # probabilities used in the original SPM T-maze demo
```

Initialize an instance of the T-maze environment

```python
env = TMazeEnv(reward_probs = reward_probabilities)
```

Structure of the state –> outcome mapping

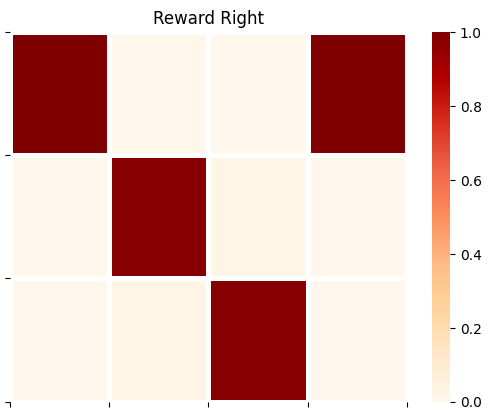

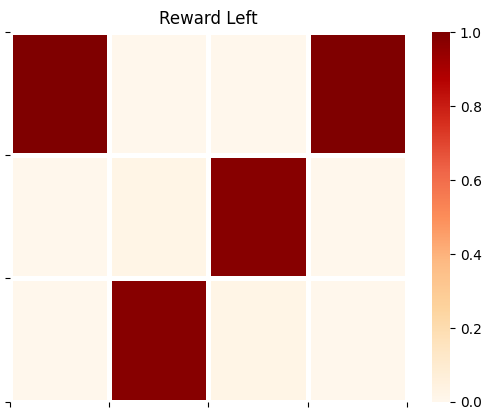

We can ‘peer into’ the rules encoded by the environment (also known as the generative process) by looking at the probability distributions that map from hidden states to observations. Following the SPM version of active inference, we refer to this collection of probabilistic relationships as the A array. In the case of the true rules of the environment, we refer to this array as A_gp (where the suffix _gp denotes the generative process).

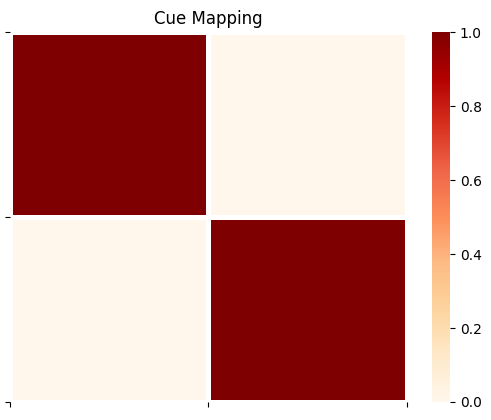

It is worth outlining what constitute the agent’s observations in this task. In this T-maze demo, we have three sensory channels or observation modalities: Location, Reward, and Cue.

The

Locationobservation values are identical to theLocationhidden state values. In this case, the agent always unambiguously observes its own state - if the agent is inRIGHT ARM, it receives aRIGHT ARMobservation in the corresponding modality. This might be analogized to a ‘proprioceptive’ sense of one’s own place.The

Rewardobservation modality assumes the valuesNo Reward,RewardorLoss. TheNo Reward(index 0) observation is observed whenever the agent isn’t occupying one of the two T-maze arms (the right or left arms). TheReward(index 1) andLoss(index 2) observations are observed in the right and left arms of the T-maze, with associated probabilities that depend on the reward condition (i.e. on the value of the second hidden state factor).The

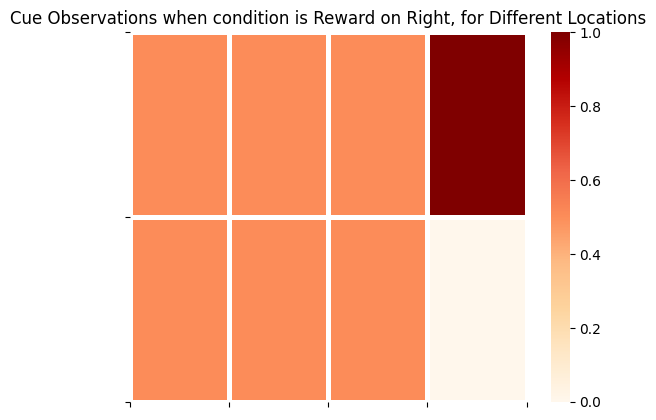

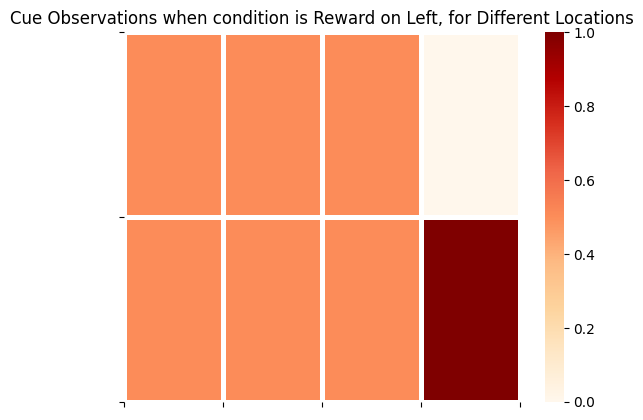

Cueobservation modality assumes the valuesCue Right,Cue Left. This observation unambiguously signals the reward condition of the trial, and therefore in which arm theRewardobservation is more probable. When the agent occupies the other arms, theCueobservation will beCue RightorCue Leftwith equal probability. However (as we’ll see below when we intialise the agent), the agent’s beliefs about the likelihood mapping render these observations uninformative and irrelevant to state inference.

In pymdp, we store the set of probability distributions encoding the conditional probabilities of observations under different configurations of hidden states as a set of matrices referred to as the likelihood mapping or A array (this is a convention borrowed from SPM). The likelihood mapping for a single modality is stored as a single multidimensional array (a numpy.ndarray) A[m] within the larger likelihood array, where m is the index of the corresponding modality. Each modality-specific A[m] has num_obs[m] rows and as many trailing dimensions (e.g. columns, ‘slices’ and higher-order dimensions) as there are hidden state factors, with the size of each trailing dimension equal to the number of levels of the corresponding factor. num_obs[m] tells you the number of observation values (also known as “levels”) for observation modality m.
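To make this shape convention concrete, here is a minimal NumPy sketch of the Reward modality likelihood A[1] for reward_probabilities = [0.98, 0.02]. It is built by hand from the verbal description of the Reward modality above rather than read out of TMazeEnv, so treat it as an illustration; the arrays returned by env.get_likelihood_dist() may differ in detail. Its first axis runs over the num_obs[1] = 3 Reward outcomes, followed by one axis per hidden state factor (4 locations, 2 reward conditions).

```python
import numpy as np

# Hand-built sketch of the Reward modality likelihood A[1], following the text
a, b = 0.98, 0.02                  # reward_probabilities used in this demo
num_obs = [4, 3, 2]                # Location, Reward, Cue modalities
num_states = [4, 2]                # Location, Reward Condition factors

# Axes: [reward outcome, location, reward condition]
A_reward = np.zeros((num_obs[1], num_states[0], num_states[1]))

# Outside the two arms the agent always observes "No reward" (index 0)
A_reward[0, 0, :] = 1.0            # CENTER
A_reward[0, 3, :] = 1.0            # CUE LOCATION

# In the arms, Reward! (index 1) and Loss! (index 2) depend on the condition
A_reward[1:, 1, 0] = [a, b]        # RIGHT ARM, Reward on Right
A_reward[1:, 1, 1] = [b, a]        # RIGHT ARM, Reward on Left
A_reward[1:, 2, 0] = [b, a]        # LEFT ARM, Reward on Right
A_reward[1:, 2, 1] = [a, b]        # LEFT ARM, Reward on Left

# Each column (one configuration of hidden states) is a distribution over outcomes
assert np.allclose(A_reward.sum(axis=0), 1.0)
print(A_reward.shape)              # (3, 4, 2)
```

Slicing A_reward[:, :, 0] gives the same kind of observation-by-location matrix that the next cell plots with the title 'Reward Right'.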

```python
# Here we get the likelihood mapping directly from the environment class. This is the likelihood
# mapping that truly describes the relationship between the environment's hidden states and the
# observations the agent will get
A_gp = env.get_likelihood_dist()

# Reward modality (index 1): one observation-by-location slice per reward condition
plot_likelihood(A_gp[1][:,:,0],'Reward Right')
plot_likelihood(A_gp[1][:,:,1],'Reward Left')

# Cue modality (index 2) at the CUE LOCATION (location index 3), for both reward conditions
plot_likelihood(A_gp[2][:,3,:],'Cue Mapping')
```

Transition Dynamics

We represent the dynamics of the environment (e.g. changes in the location of the agent and changes to the reward condition) as conditional probability distributions that encode the likelihood of transitions between the states of a given hidden state factor. These distributions are collected into the so-called B array, also known as the transition likelihoods or transition distributions. As with the A array, we denote the true probabilities describing the environmental dynamics as B_gp. Each sub-matrix B_gp[f] of the larger array encodes the transition probabilities between state-values of the hidden state factor with index f. These matrices encode dynamics as Markovian transition probabilities, such that the entry $i,j$ of a given matrix encodes the probability of transition to state $i$ at time $t+1$, given state $j$ at $t$.
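This column convention can be checked with a few lines of NumPy. The sketch below uses a hand-written identity matrix for the Reward Condition factor (an assumption matching the stationary reward condition used in this demo; it is not read from env.get_transition_dist()) and a hypothetical ‘volatile’ alternative, and propagates a belief one step forward as $q_{t+1} = B\,q_t$.

```python
import numpy as np

# Entry [i, j] = P(s_{t+1} = i | s_t = j); a stationary reward condition is the identity
B_condition = np.eye(2)

# Columns index the current state, so each column must sum to 1
assert np.allclose(B_condition.sum(axis=0), 1.0)

# Propagating a belief one step forward is a matrix-vector product
q_t = np.array([0.7, 0.3])       # hypothetical belief over {Reward on Right, Reward on Left}
q_next = B_condition @ q_t
print(q_next)                    # [0.7 0.3]

# Hypothetical "volatile" condition that switches with probability 0.1 per step
B_volatile = np.array([[0.9, 0.1],
                       [0.1, 0.9]])
assert np.allclose(B_volatile.sum(axis=0), 1.0)
print(B_volatile @ q_t)          # belief drifts toward uniform
```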

```python
# Here we get the transition mapping directly from the environment class. This is the transition
# mapping that truly describes the environment's dynamics
B_gp = env.get_transition_dist()
```

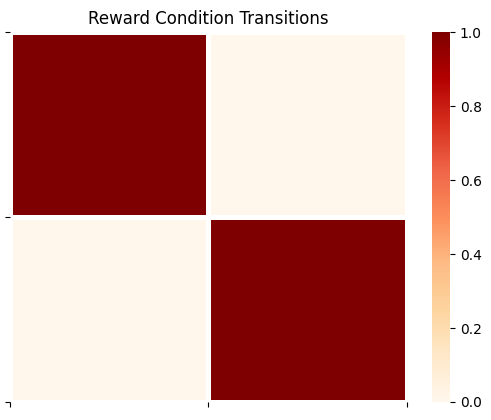

For example, we can inspect the ‘dynamics’ of the Reward Condition factor by indexing into the appropriate sub-matrix of B_gp:

```python
plot_likelihood(B_gp[1][:,:,0],'Reward Condition Transitions')
```

The above transition array is the ‘trivial’ identity matrix, meaning that the reward condition doesn’t change over time (it is mapped from whatever its current value is to the same value at the next timestep).

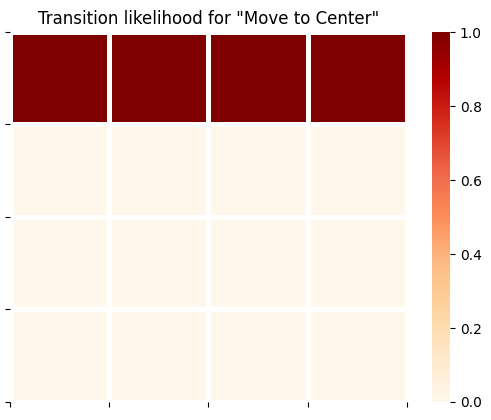

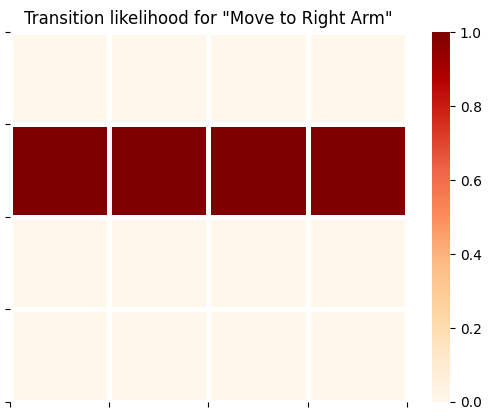

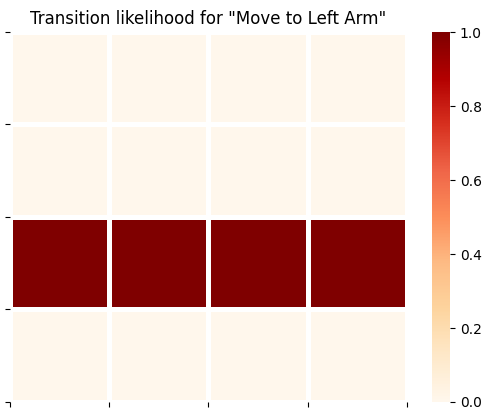

(Controllable-) Transition Dynamics

Importantly, some hidden state factors are controllable by the agent, meaning that the probability of being in state $i$ at $t+1$ isn’t merely a function of the state at $t$, but also of actions (or, from the agent’s perspective, control states). So now each transition likelihood encodes conditional probability distributions over states at $t+1$, where the conditioning variables are both the states at $t$ and the actions at $t$. This extra conditioning on actions is encoded via an optional third dimension to each factor-specific B array.

For example, in our case the first hidden state factor (Location) is under the control of the agent, which means the corresponding transition likelihoods B[0] are index-able by both previous state and action.
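A controllable factor’s B array gains a third, action axis. The sketch below builds a Location transition array by hand under the assumption, suggested by the plot titles in the next cell, that action k moves the agent deterministically to location k from anywhere; it is an illustration and is not read from TMazeEnv.

```python
import numpy as np

num_locations = 4  # CENTER, RIGHT ARM, LEFT ARM, CUE LOCATION
num_actions = 4    # "Move to" each of the four locations

# Axes: [next location, current location, action]
B_location = np.zeros((num_locations, num_locations, num_actions))
for action in range(num_actions):
    # Whatever the current location, this action lands the agent in location `action`
    B_location[action, :, action] = 1.0

# For every action, each column is a distribution over next locations
assert np.allclose(B_location.sum(axis=0), 1.0)

# Starting in the CENTER and taking "Move to Cue Location" (action 3)
s_center = np.array([1.0, 0.0, 0.0, 0.0])
print(B_location[:, :, 3] @ s_center)   # [0. 0. 0. 1.]
```

Selecting an action simply selects which slice of the array governs the next transition.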

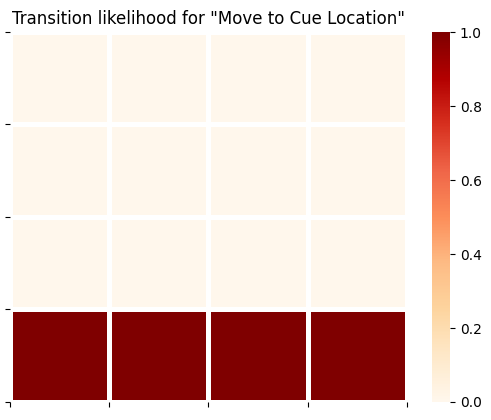

```python
plot_likelihood(B_gp[0][:,:,0],'Transition likelihood for "Move to Center"')
plot_likelihood(B_gp[0][:,:,1],'Transition likelihood for "Move to Right Arm"')
plot_likelihood(B_gp[0][:,:,2],'Transition likelihood for "Move to Left Arm"')
plot_likelihood(B_gp[0][:,:,3],'Transition likelihood for "Move to Cue Location"')
```

The generative model

Now we can move on to setting up the generative model of the agent, namely, the agent’s beliefs or assumptions about how hidden states give rise to observations, and how hidden states transition among each other.

In almost all MDPs, the critical building blocks of this generative model are the agent’s representation of the observation likelihood, which we’ll refer to as A_gm, and its representation of the transition likelihood, which we’ll call B_gm.

Here, we assume the agent has a veridical representation of the rules of the T-maze (namely, how hidden states cause observations) as well as of its ability to control its own movements with certain consequences (i.e. ‘noiseless’ transitions). In other words, the agent’s generative model starts from exactly the arrays A_gp and B_gp that define the generative process.

```python
A_gm = copy.deepcopy(A_gp) # make a copy of the true observation likelihood to initialize the observation model
B_gm = copy.deepcopy(B_gp) # make a copy of the true transition likelihood to initialize the transition model
```
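Why copy.deepcopy rather than plain assignment or a shallow copy? pymdp stores A and B as NumPy object arrays holding one sub-array per modality or factor. Assuming A_gp is such an array, a shallow copy would share the inner matrices, so later edits to the agent’s model would silently change the generative process too. The toy arrays below are hypothetical stand-ins used only to show the difference.

```python
import copy
import numpy as np

# Stand-in for A_gp: an object array holding one likelihood matrix per modality
A_true = np.empty(2, dtype=object)
A_true[0] = np.eye(2)
A_true[1] = np.array([[0.9, 0.2],
                      [0.1, 0.8]])

A_shallow = copy.copy(A_true)      # new outer container, same inner arrays
A_deep = copy.deepcopy(A_true)     # inner arrays are copied too

A_deep[1][0, 0] = 0.5              # edit the agent's model only
print(A_true[1][0, 0])             # 0.9: the "true" process is untouched

A_shallow[1][0, 0] = 0.5           # this edit leaks into A_true
print(A_true[1][0, 0])             # 0.5
```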

Important Concept to Note Here!!!

It is not necessary, or even in many cases important, that the generative model is a veridical representation of the generative process. This distinction between the generative model (essentially, the beliefs the agent entertains about its interaction with the world) and the generative process (the actual dynamical system ‘out there’ generating sensations) is of crucial importance to the active inference formalism and (in our experience) often overlooked in code.

We encode the generative process using A and B matrices purely for notational and computational convenience: doing so puts the rules of the environment in a data structure that can easily be converted into the Markovian-style conditional distributions used to encode the agent’s generative model.

Strictly speaking, however, all the generative process needs to do is generate observations that are ‘digestible’ by the agent and be ‘perturbable’ by actions issued by the agent. The way in which it does so can be arbitrarily complex, non-linear, and inaccessible to the agent. Namely, it doesn’t have to be encoded by A and B arrays (which amount to Markovian conditional probability tables), but could be described by arbitrarily complex nonlinear or non-differentiable transformations of hidden states that generate observations.
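To illustrate the point, here is a hypothetical generative process for the same task written as plain if/else rules, with no A or B arrays. The class name RuleBasedTMaze and its behavior are illustrative assumptions, not part of pymdp; it only mimics the reset()/step(action) pattern this notebook uses with TMazeEnv, returning observations as [location, reward, cue] indices in the orderings used here.

```python
import random


class RuleBasedTMaze:
    """Hypothetical T-maze generative process written as plain rules, not A/B arrays.

    Observation indices: location 0-3 = CENTER, RIGHT ARM, LEFT ARM, CUE LOCATION;
    reward 0-2 = No reward, Reward!, Loss!; cue 0-1 = Cue Right, Cue Left.
    """

    def __init__(self, reward_prob=0.98, seed=None):
        self.rng = random.Random(seed)
        self.reward_prob = reward_prob
        self.reset()

    def _observe(self):
        if self.location in (1, 2):                  # in one of the two arms
            correct_arm = 1 if self.condition == 0 else 2
            p_reward = self.reward_prob if self.location == correct_arm else 1 - self.reward_prob
            reward = 1 if self.rng.random() < p_reward else 2
        else:
            reward = 0                               # No reward outside the arms
        if self.location == 3:
            cue = self.condition                     # the cue reveals the condition
        else:
            cue = self.rng.randint(0, 1)             # elsewhere, an uninformative coin flip
        return [self.location, reward, cue]

    def reset(self):
        self.location = 0                            # start in the CENTER
        self.condition = self.rng.randint(0, 1)      # 0 = Reward on Right, 1 = Reward on Left
        return self._observe()

    def step(self, action):
        self.location = int(action[0])               # action k moves the agent to location k
        return self._observe()


env_sketch = RuleBasedTMaze(seed=0)
print(env_sketch.reset())
print(env_sketch.step([3]))   # at the CUE LOCATION, the cue index equals the condition
```

Nothing about this object is a conditional probability table, yet it produces observations the agent could ‘digest’ and responds to its actions, which is all the generative process strictly needs to do.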

Introducing the Agent() class

In pymdp, we have abstracted much of the computation required for active inference into the Agent class, a flexible object that can be used to store necessary aspects of the generative model and the agent’s instantaneous observations and actions, and to perform perception and action using methods like Agent.infer_states and Agent.infer_policies.

An instance of Agent is straightforwardly initialized with a call to the Agent() constructor with a list of optional arguments.

In our call to Agent(), we need to adapt the default behavior to some T-maze-specific needs. For example, we want to make sure that the agent’s beliefs about transitions reflect the fact that it can only control the Location factor, not the Reward Condition (which we assume is stationary across an epoch of time). We specify this with a list of indices that is passed as the control_fac_idx argument of the Agent() constructor.

Each element in the list specifies a hidden state factor (in terms of its index) that is controllable by the agent. Hidden state factors whose indices are not in this list are assumed to be uncontrollable.
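The practical effect of this list can be sketched without pymdp. Assuming, as is common, that an uncontrollable factor is treated as having a single ‘null’ action, the one-step policies are all combinations of one action per factor. The names below are illustrative and are not pymdp’s internal code.

```python
import itertools

num_factors = 2                 # Location, Reward Condition
control_fac_idx = [0]           # only Location is controllable
actions_per_factor = {0: 4}     # Move to CENTER / RIGHT ARM / LEFT ARM / CUE LOCATION

# Uncontrollable factors get a single null action (index 0)
num_controls = [actions_per_factor[f] if f in control_fac_idx else 1
                for f in range(num_factors)]

# One-step policies: every combination of one action per factor
policies = list(itertools.product(*(range(n) for n in num_controls)))
print(num_controls)   # [4, 1]
print(policies)       # [(0, 0), (1, 0), (2, 0), (3, 0)]
```

Because the Reward Condition contributes only one option, the agent’s choices reduce to the four movements.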

```python
controllable_indices = [0] # this is a list of the indices of the hidden state factors that are controllable
```

Now we can construct our agent…

```python
agent = Agent(A=A_gm, B=B_gm, control_fac_idx=controllable_indices)
```

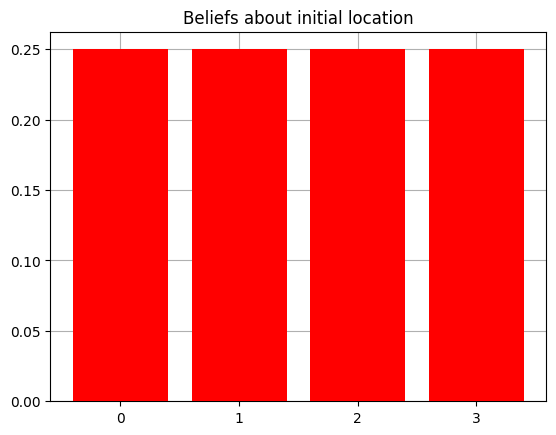

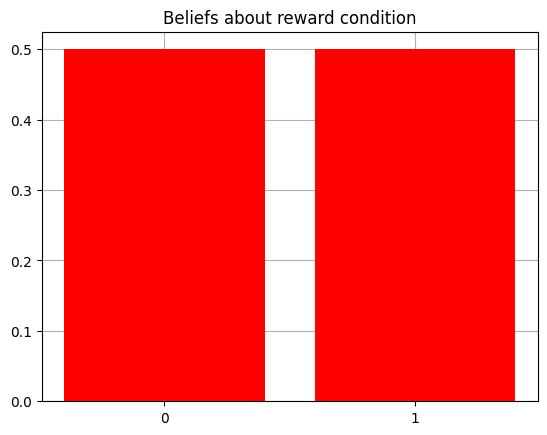

Now we can inspect (and change) properties of the agent as we see fit. Let’s look at the initial beliefs the agent has about its starting location and reward condition, encoded in the prior over hidden states $P(s)$, known in SPM lingo as the D array.

```python
plot_beliefs(agent.D[0],"Beliefs about initial location")
plot_beliefs(agent.D[1],"Beliefs about reward condition")
```

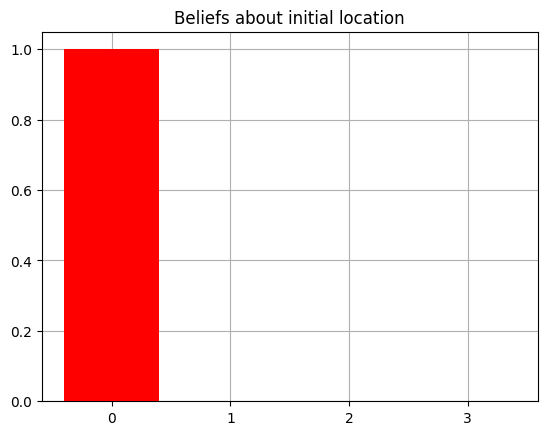

Let’s make it so that agent starts with precise and accurate prior beliefs about its starting location.

```python
agent.D[0] = utils.onehot(0, agent.num_states[0])
```

The onehot(value, dimension) function within pymdp.utils is a convenient way to generate a one-hot vector of length dimension with a 1 at the index value.
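For reference, the behavior just described amounts to the following. onehot_sketch is a hypothetical stand-in written with NumPy, not pymdp’s own source.

```python
import numpy as np

def onehot_sketch(value, dimension):
    """Illustrative stand-in for pymdp.utils.onehot: a length-`dimension`
    vector with 1.0 at index `value` and 0.0 everywhere else."""
    arr = np.zeros(dimension)
    arr[value] = 1.0
    return arr

# Prior that puts all belief on location 0 (CENTER)
print(onehot_sketch(0, 4))   # [1. 0. 0. 0.]
```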

And now confirm that our agent knows (i.e. has accurate beliefs about) its initial state by visualizing its priors again.

```python
plot_beliefs(agent.D[0],"Beliefs about initial location")
```

Another thing we want to do in this case is make sure the agent has a ‘sense’ of reward / loss and thus a motivation to be in the ‘correct’ arm (the arm that maximizes the probability of getting the reward outcome).

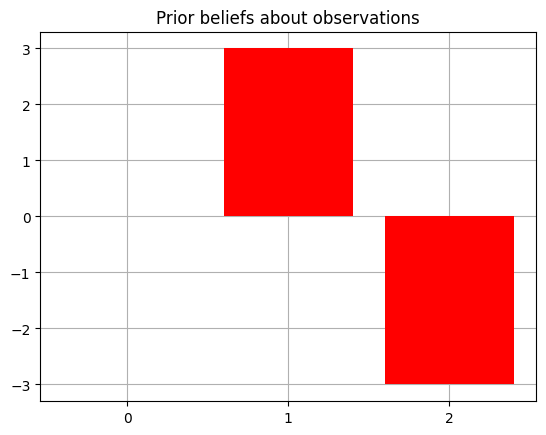

We can do this by changing the prior beliefs about observations, the C array (also known as the prior preferences). This is represented as a collection of distributions over observations for each modality. It is initialized by default to be all 0s, which means the agent has no preference for particular outcomes. Since the second modality (index 1 of the C array) is the Reward modality, with the index of the Reward outcome being 1 and that of the Loss outcome being 2, we populate the corresponding entries with values whose relative magnitudes encode the preference for one outcome over another (technically, this is encoded directly in terms of relative log-probabilities).

It is precisely our ability to give the agent prior beliefs that it tends to observe the outcome with index 1 in the Reward modality more often than the outcome with index 2 that makes this modality a Reward modality in the first place; otherwise, it would just be an arbitrary observation with no extrinsic value per se.

```python
agent.C[1][1] = 3.0   # Reward! (index 1) is preferred
agent.C[1][2] = -3.0  # Loss! (index 2) is dispreferred

plot_beliefs(agent.C[1],"Prior beliefs about observations")
```
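Reading the entries of C[1] as relative log-probabilities, as the text above does, a softmax turns them into the implied preferred distribution over Reward outcomes. This NumPy sketch assumes C[1] = [0.0, 3.0, -3.0] after the assignments above (keeping the default 0 for No reward); pymdp’s internal handling of C may differ. It puts roughly 0.95 of the preference mass on Reward! and shows that only differences between entries matter.

```python
import numpy as np

# C[1] after the assignments above: [No reward, Reward!, Loss!]
C_reward = np.array([0.0, 3.0, -3.0])

def softmax(x):
    """Numerically stable softmax: subtract the max before exponentiating."""
    e = np.exp(x - x.max())
    return e / e.sum()

prefs = softmax(C_reward)
print(prefs.round(3))

# Only differences matter: shifting every entry by a constant changes nothing
assert np.allclose(prefs, softmax(C_reward + 10.0))
```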

Active Inference

Now we can start off the T-maze with an initial observation and run active inference via a loop over a desired time interval.

```python
T = 5 # number of timesteps

obs = env.reset() # reset the environment and get an initial observation

# these are useful for displaying read-outs during the loop over time
reward_conditions = ["Right", "Left"]
location_observations = ['CENTER','RIGHT ARM','LEFT ARM','CUE LOCATION']
reward_observations = ['No reward','Reward!','Loss!']
cue_observations = ['Cue Right','Cue Left']

msg = """ === Starting experiment === \n Reward condition: {}, Observation: [{}, {}, {}]"""
print(msg.format(reward_conditions[env.reward_condition], location_observations[obs[0]], reward_observations[obs[1]], cue_observations[obs[2]]))

for t in range(T):
    qx = agent.infer_states(obs)         # update posterior beliefs about hidden states
    q_pi, efe = agent.infer_policies()   # infer a posterior over policies
    action = agent.sample_action()       # sample the next action

    msg = """[Step {}] Action: [Move to {}]"""
    print(msg.format(t, location_observations[int(action[0])]))

    obs = env.step(action)               # the environment responds with a new observation

    msg = """[Step {}] Observation: [{}, {}, {}]"""
    print(msg.format(t, location_observations[obs[0]], reward_observations[obs[1]], cue_observations[obs[2]]))
```

```
=== Starting experiment ===
Reward condition: Left, Observation: [CENTER, No reward, Cue Left]
[Step 0] Action: [Move to CUE LOCATION]
[Step 0] Observation: [CUE LOCATION, No reward, Cue Left]
[Step 1] Action: [Move to LEFT ARM]
[Step 1] Observation: [LEFT ARM, Reward!, Cue Right]
[Step 2] Action: [Move to LEFT ARM]
[Step 2] Observation: [LEFT ARM, Reward!, Cue Left]
[Step 3] Action: [Move to LEFT ARM]
[Step 3] Observation: [LEFT ARM, Reward!, Cue Right]
[Step 4] Action: [Move to LEFT ARM]
[Step 4] Observation: [LEFT ARM, Reward!, Cue Left]
```

The agent begins by moving to the CUE LOCATION to resolve its uncertainty about the reward condition, because it knows it will get an informative cue in this location that signals the true reward condition unambiguously. At the beginning of the next timestep, the agent uses this observation to update its posterior beliefs about the reward condition, qx[1], to reflect the true reward condition. Having resolved its uncertainty, the agent then moves to the LEFT ARM to maximize utility and stays there, given its (correct) beliefs about the reward condition and the mapping between hidden states and reward observations.
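The belief update for the reward condition can be sketched with Bayes’ rule. This is a simplification under stated assumptions: pymdp’s infer_states updates all hidden state factors together, whereas here the location is treated as known, and the cue likelihood is hand-built from the description of the Cue modality (cue index 0 = Cue Right, 1 = Cue Left) rather than read from A_gm.

```python
import numpy as np

# Cue likelihood P(cue | location, condition), axes [cue obs, location, condition]
A_cue = np.full((2, 4, 2), 0.5)          # uninformative everywhere...
A_cue[:, 3, :] = np.eye(2)               # ...except at the CUE LOCATION (index 3)

def update_condition_belief(prior, location, cue_obs):
    """Bayes' rule for the Reward Condition factor, with the location known."""
    likelihood = A_cue[cue_obs, location, :]   # P(observed cue | location, each condition)
    posterior = likelihood * prior
    return posterior / posterior.sum()

prior = np.array([0.5, 0.5])                    # flat prior over {Right, Left}
print(update_condition_belief(prior, location=3, cue_obs=1))  # [0. 1.]
print(update_condition_belief(prior, location=0, cue_obs=1))  # [0.5 0.5]
```

Seeing Cue Left at the CUE LOCATION makes the agent certain of Reward on Left, while the same cue seen anywhere else leaves the prior unchanged.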

Notice, perhaps confusingly, that the agent continues to receive observations in the 3rd modality (i.e. samples from A_gp[2]). These are observations of the form Cue Right or Cue Left. However, these ‘cue’ observations are random and totally ambiguous unless the agent is in the CUE LOCATION. This is reflected by maximally entropic (uniform) distributions in the corresponding columns of A_gp[2], and in the agent’s beliefs about this ambiguity, encoded in A_gm[2]. See below.

```python
plot_likelihood(A_gp[2][:,:,0],'Cue Observations when condition is Reward on Right, for Different Locations')
plot_likelihood(A_gp[2][:,:,1],'Cue Observations when condition is Reward on Left, for Different Locations')
```

The final column on the right side of each of these matrices represents the distribution over cue observations conditioned on the agent being in the CUE LOCATION under the corresponding Reward Condition. This demonstrates that cue observations are uninformative, lacking epistemic value for the agent, unless it is in the CUE LOCATION.
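One standard way to quantify this is the expected information gain (epistemic value) of visiting each location: the entropy of the predicted cue minus the expected entropy of the cue given each condition. The sketch below computes it for a flat prior, using a hand-built cue likelihood that follows the text rather than A_gp itself; pymdp’s infer_policies folds a term of this kind into the expected free energy, but this is not its code. Only the CUE LOCATION yields a positive gain, ln 2 ≈ 0.693 nats.

```python
import numpy as np

# Cue likelihood P(cue | location, condition), axes [cue obs, location, condition]
A_cue = np.full((2, 4, 2), 0.5)
A_cue[:, 3, :] = np.eye(2)

def entropy(p):
    """Shannon entropy in nats, ignoring zero-probability entries."""
    p = p[p > 0]
    return -np.sum(p * np.log(p))

def expected_info_gain(location, prior):
    """Mutual information (nats) between the cue and the reward condition at `location`."""
    A_loc = A_cue[:, location, :]               # [cue obs, condition]
    predicted_obs = A_loc @ prior               # P(cue | location)
    expected_ambiguity = sum(prior[c] * entropy(A_loc[:, c]) for c in range(len(prior)))
    return entropy(predicted_obs) - expected_ambiguity

prior = np.array([0.5, 0.5])
locations = ['CENTER', 'RIGHT ARM', 'LEFT ARM', 'CUE LOCATION']
for loc, name in enumerate(locations):
    print(f"{name}: {expected_info_gain(loc, prior):.3f} nats")
```

Once the agent is certain of the condition (e.g. a prior of [0, 1]), the gain at the CUE LOCATION also drops to zero, which is why it does not return there.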

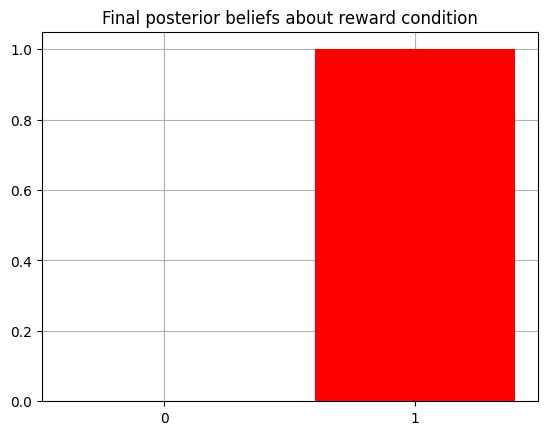

Now we can inspect the agent’s final beliefs about the reward condition characterizing the ‘trial,’ having undergone 5 timesteps (T = 5) of active inference.

```python
plot_beliefs(qx[1],"Final posterior beliefs about reward condition")
```